Effective Ways to Find Degrees of Freedom in Statistical Analysis (2025)

How to Find Degrees of Freedom in Statistical Analysis

Understanding how to find degrees of freedom is essential for anyone engaged in statistical analysis. Degrees of freedom play a critical role in hypothesis testing, regression analysis, and many other statistical methods. Calculating them correctly directly affects the validity of your statistical tests. This article explores methods for calculating degrees of freedom, explains their implications in various statistical contexts, and provides practical examples for clarity.

Understanding Degrees of Freedom in Statistics

Degrees of freedom are the number of independent values that remain free to vary in a statistical calculation once any constraints have been imposed. The count depends on the sample size and on how many quantities are estimated from the data. For instance, in a one-sample t-test the sample mean is estimated first, which uses up one degree of freedom, so the degrees of freedom equal the sample size minus one (n – 1). Getting this count right matters because it determines the reference distribution against which the test statistic is compared.
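To make the idea of "independent values" concrete, here is a minimal Python sketch. It shows why fixing the mean leaves only n – 1 deviations free to vary. The function name `last_deviation` and the sample values are illustrative assumptions, not taken from any library or dataset.

```python
from statistics import mean

def last_deviation(free_deviations):
    """Return the one deviation that is forced once the others are chosen.

    Deviations from the sample mean always sum to zero, so after choosing
    n - 1 of them freely, the n-th is fully determined.
    """
    return -sum(free_deviations)

# Hypothetical sample, for illustration only.
sample = [4.0, 7.0, 9.0, 12.0]
m = mean(sample)
deviations = [x - m for x in sample]

# The first n - 1 deviations determine the last one.
forced = last_deviation(deviations[:-1])
print(deviations[-1], forced)                  # 4.0 4.0
print("degrees of freedom:", len(sample) - 1)  # degrees of freedom: 3
```

Because the last deviation carries no new information, the sample variance divides by n – 1 rather than n.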

Degrees of Freedom Formula

The degrees of freedom formula depends on the test being run. For a classic one-sample t-test, the formula is simple: df = n – 1, where n is the number of observations. For a two-sample t-test that assumes equal variances in the two groups (the pooled test), df = n1 + n2 – 2, where n1 and n2 are the sizes of the two samples being compared. Using the formula that matches your test is necessary for obtaining the correct critical values and p-values.
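The two formulas above can be written as small helper functions. This is only a sketch: the function names are hypothetical, and the two-sample version assumes the equal-variance (pooled) form of the test.

```python
def df_one_sample(n):
    """Degrees of freedom for a one-sample t-test: n - 1."""
    if n < 2:
        raise ValueError("need at least 2 observations")
    return n - 1

def df_two_sample_pooled(n1, n2):
    """Degrees of freedom for a pooled two-sample t-test: n1 + n2 - 2.

    Assumption: both groups share a common variance.
    """
    if n1 < 2 or n2 < 2:
        raise ValueError("need at least 2 observations per group")
    return n1 + n2 - 2

print(df_one_sample(25))             # 24
print(df_two_sample_pooled(30, 28))  # 56
```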

Significance of Degrees of Freedom

Degrees of freedom are not merely abstract numbers; they carry significant implications for statistical interpretation. Higher degrees of freedom generally correspond to a larger sample, which makes estimates more precise. Low degrees of freedom usually indicate a small sample and lead to wider confidence intervals and less reliable conclusions. Degrees of freedom also set the critical values used in hypothesis testing: as the degrees of freedom fall, the critical t value rises, so a larger test statistic is needed to reach statistical significance.

Common Misconceptions About Degrees of Freedom

Many students run into misconceptions when learning about degrees of freedom. A prevalent one is that degrees of freedom are simply the sample size. In fact, the count also depends on the constraints imposed by the analysis, such as how many parameters are estimated. For example, in regression analysis the degrees of freedom depend on the number of predictors as well as the total sample size. Clearing up these misconceptions makes the rest of statistical analysis easier to follow.

Calculating Degrees of Freedom

Calculation of degrees of freedom can vary widely depending on the statistical test employed. Here’s a breakdown of methodologies used in different testing scenarios to demonstrate the various degrees of freedom calculations.

Degrees of Freedom in t-tests

T-tests are among the most widely used statistical tests. For a simple one-sample t-test, calculating degrees of freedom is straightforward: df = n – 1. For an independent-samples t-test with pooled variance, the formula is df = n1 + n2 – 2, because one mean is estimated in each group. Knowing the degrees of freedom is what allows the t statistic to be converted into a p-value and compared against the chosen significance level.
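The sketch below shows where the degrees of freedom enter a pooled two-sample t-test when it is computed from raw data. It assumes independent samples with equal population variances. The function name `pooled_t_test` and the measurements are made up for illustration.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t_test(a, b):
    """Return (t statistic, degrees of freedom) for a pooled two-sample t-test.

    Assumption: independent samples with equal population variances.
    """
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # statistics.variance divides by n - 1, so multiplying by (n - 1)
    # recovers each group's sum of squared deviations.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    std_err = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / std_err, df

# Hypothetical measurements, for illustration only.
group_a = [5.1, 4.9, 5.6, 5.8, 5.0]
group_b = [4.2, 4.8, 4.5, 4.1]
t_stat, df = pooled_t_test(group_a, group_b)
print(f"t = {t_stat:.3f}, df = {df}")
```

Here df is 5 + 4 – 2 = 7. The same number is the divisor of the pooled variance, which is why the formula and the test statistic have to agree.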

Degrees of Freedom in ANOVA

In ANOVA (Analysis of Variance), degrees of freedom are split into three components: between-group, within-group, and total. The between-group degrees of freedom are k – 1 (with k being the number of groups), while the within-group degrees of freedom are N – k (where N is the total number of observations). The total degrees of freedom are N – 1, which is the sum of the other two. Getting these values right is essential, since the F statistic is built from the between-group and within-group variation, each divided by its own degrees of freedom.
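As a quick check on the one-way ANOVA partition, the following sketch computes all three components from a list of group sizes and confirms that they add up. The function name `anova_df` and the group sizes are illustrative assumptions.

```python
def anova_df(group_sizes):
    """Return between-group, within-group and total df for one-way ANOVA."""
    k = len(group_sizes)   # number of groups
    N = sum(group_sizes)   # total number of observations
    if k < 2 or N <= k:
        raise ValueError("need at least 2 groups and more observations than groups")
    between = k - 1
    within = N - k
    total = N - 1
    # The partition must be exact: (k - 1) + (N - k) = N - 1.
    assert between + within == total
    return {"between": between, "within": within, "total": total}

# Three hypothetical groups of 10, 12 and 8 observations.
print(anova_df([10, 12, 8]))  # {'between': 2, 'within': 27, 'total': 29}
```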

Degrees of Freedom for Regression Analysis

In regression analysis, the situation becomes a bit more complex. The total degrees of freedom equal the number of observations minus one (N – 1). The regression (model) degrees of freedom equal the number of predictors (p), and the residual (error) degrees of freedom are what remains: df = N – p – 1, where the extra 1 accounts for the intercept. The residual degrees of freedom are used in the t-tests for individual coefficients, in the F-test for the overall model, and in adjusted R-squared, so they matter when interpreting significance tests for model parameters.
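As a rough sketch of the bookkeeping, assume an ordinary least squares model with an intercept. The total N – 1 then splits into p degrees of freedom for the regression and N – p – 1 for the residuals. The function name `regression_df` and the example numbers are hypothetical.

```python
def regression_df(n_obs, n_predictors):
    """Split total df into regression and residual parts.

    Assumption: a linear model with an intercept, so the residual
    degrees of freedom are N - p - 1.
    """
    residual = n_obs - n_predictors - 1
    if residual < 1:
        raise ValueError("too few observations for this many predictors")
    return {
        "regression": n_predictors,
        "residual": residual,
        "total": n_obs - 1,
    }

# 50 observations and 3 predictors (illustrative values).
print(regression_df(50, 3))  # {'regression': 3, 'residual': 46, 'total': 49}
```

Adding a predictor moves one degree of freedom from the residual row to the regression row, which is why a model with many predictors and few observations leaves little information for estimating the error variance.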

Practical Applications of Degrees of Freedom

Degrees of freedom underpin a wide range of statistical methods. This section looks at how they are applied in hypothesis-testing scenarios, where they shape how the reliability and significance of results are judged.

Real-world Examples of Degrees of Freedom

Concrete examples make the concept easier to grasp. Consider clinical trials, where determining the effect of a drug involves comparing outcomes between treatment and control groups. A two-sample t-test would calculate degrees of freedom from the number of participants: with 50 participants in each group, for example, the pooled test has 50 + 50 – 2 = 98 degrees of freedom. That value determines the critical value used to judge the result, and so it directly influences the interpretation. Real-world examples like this help build an understanding of statistical reliability and significance.

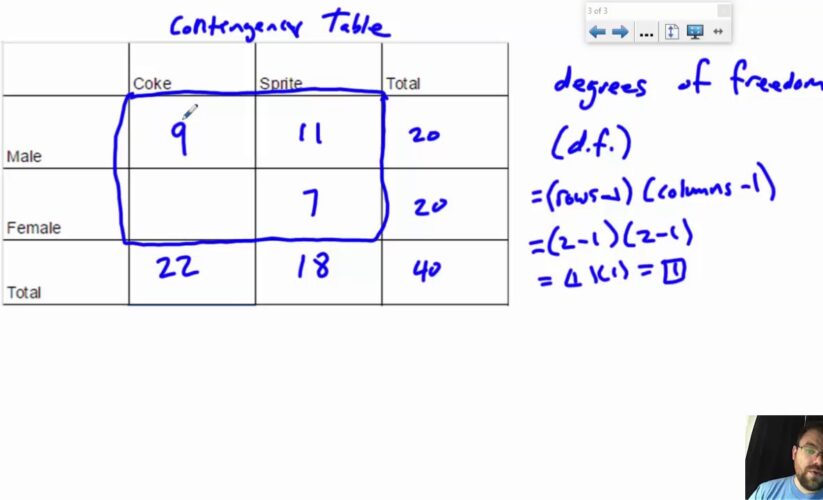

Visualizing Degrees of Freedom

Visual aids can remarkably simplify the complex concept of degrees of freedom. Flowcharts or diagrams can effectively represent calculations of degrees of freedom in different contexts, boosting educational experiences. Visualizing these concepts allows research students and practitioners alike to map out pathways and identify appropriate methodologies quickly. Using accessible and engaging representations of degrees of freedom in data analysis can ignite deeper understanding among students and professionals.

Foundation for Higher Statistical Learning

Understanding degrees of freedom lays the groundwork for advanced statistical techniques and methodologies, including Bayesian analysis and machine learning models. These areas build on a firm grasp of degrees-of-freedom calculations and their implications. A solid understanding supports sound interpretation and analysis as you move into more advanced work. Educational resources on degrees of freedom therefore play an important role in building statistical skill.

Key Takeaways

- Degrees of freedom are essential for accurate statistical analysis, impacting the validity of your results.

- Calculating degrees of freedom involves varied formulas depending on the test, such as df = n – 1 for one-sample t-tests and df = n1 + n2 – 2 for two-sample t-tests.

- Understanding the significance and implications of degrees of freedom is crucial for interpreting results and conducting reliable statistical tests.

- Visual representations and practical examples significantly enhance comprehension and application of degrees of freedom in real-world scenarios.

- Educational resources and real-life case studies can bolster grasp of advanced statistical techniques linked to degrees of freedom.

FAQ

1. What is the basic definition of degrees of freedom?

Degrees of freedom refer to the number of independent values that can vary in a statistical analysis. They play a critical role in procedures such as t-tests and ANOVA. In simpler terms, they are a count of the independent pieces of information available for estimating a quantity, and they are vital for judging the reliability of findings.

2. How does sample size impact degrees of freedom?

The relationship between sample size and degrees of freedom is direct: as the sample size increases, degrees of freedom increase, leading to more precise estimates and more reliable statistical tests. Conversely, smaller samples give fewer degrees of freedom, more variable estimates, and less confidence in the results, which makes it harder to reach statistical significance.

3. Can degrees of freedom be negative?

Not in any meaningful sense for standard tests. A formula such as N – p – 1 can produce zero or a negative number when the sample is too small relative to the number of parameters being estimated. That outcome signals that the model cannot be properly estimated from the data; it is not a valid degrees-of-freedom value. If you get a negative number, treat it as a warning that you need more observations or fewer parameters.

4. What role do degrees of freedom play in hypothesis testing?

In hypothesis testing, degrees of freedom determine the critical values for statistical tests and therefore the thresholds for making decisions. They define the sampling distribution of the test statistic (for example, which t, chi-square, or F distribution applies), and so they guide the decision about whether to reject the null hypothesis.

5. How does one calculate degrees of freedom for paired samples?

For paired samples, the degrees of freedom equal the number of pairs minus one (df = n – 1, where n is the number of pairs, not the total number of measurements). The formula follows from the fact that a paired test is a one-sample t-test on the within-pair differences.
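A short sketch of the paired case follows. It reduces each pair to a single difference and counts degrees of freedom from the number of pairs. The function name `paired_differences` and the before/after readings are made up for illustration.

```python
def paired_differences(before, after):
    """Return the within-pair differences and the paired-test df."""
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    if len(before) < 2:
        raise ValueError("need at least 2 pairs")
    diffs = [a - b for b, a in zip(before, after)]
    # df counts pairs, not individual measurements.
    return diffs, len(diffs) - 1

# Hypothetical readings for six subjects.
before = [120, 135, 128, 140, 132, 125]
after = [115, 130, 129, 133, 128, 121]
diffs, df = paired_differences(before, after)
print(diffs)  # [-5, -5, 1, -7, -4, -4]
print(df)     # 5
```

Although there are twelve measurements in total, only six differences enter the test, so df = 6 – 1 = 5.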